by David Black and Joseph Plazak

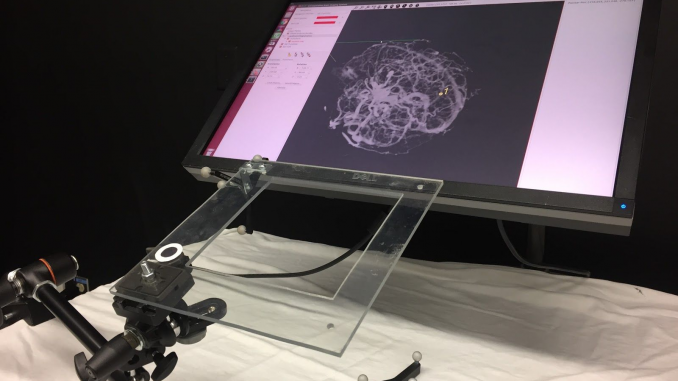

In image-guided medical procedures, information on a screen is often used to help the clinician complete a task involving the placement of a device in relation to the planning data, which typically consists of 2D or 3D datasets. This can aid, for instance, during neurosurgery, liver tumor resection, or ablation or biopsy needle placement. However, presenting information on a screen in the operating room has certain drawbacks: clinicians need to constantly switch their view between the patient and the display, and might not be aware of important changes to the clinical situation when looking at the screen.

The field of auditory display has begun to attract attention as a useful way of delivering information from image-guided systems to clinicians, with the aim of addressing the shortcomings of purely visual navigation. Analogous to visual display, auditory display uses sound rather than visualizations to transmit information to the user. We use auditory display in everyday life, for instance, in the form of alarm clocks, car parking aids, etc. Auditory display in image-guided interventions is certainly promising: the relatively free auditory perception channel can offload reliance on the visual display by transmitting information without line of sight to a screen and by delivering near-instant notifications. In addition, processing and generating audio in real time is fast and efficient compared to the computing power required by many visualization programs. Even decades-old machines are usually capable of synthesis for auditory display, making retrofitting older navigation systems potentially simple.

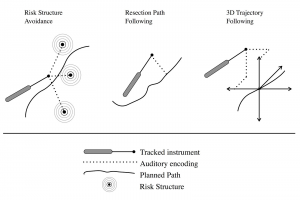

Despite auditory display’s potential as a meaningful way of providing intraoperative information, it has, until recently, been only sparsely investigated (see this review). Supported tasks have included needle placement, temporal bone drilling, and lesion resection. Common auditory display mappings encode the distance to risk structures, the distance to a resection line, or the distance to a 3D trajectory (see figure 1).
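To make the idea of a distance mapping concrete, here is a minimal sketch in Python of a parking-aid-style mapping, in which beeps become faster and higher-pitched as an instrument approaches a risk structure. It is purely illustrative and is not the method used in any of the studies mentioned here; the function names, the 20 mm range, and the pitch and timing limits are all assumptions chosen for the example.

```python
import math


def distance_to_beep(distance_mm, max_distance_mm=20.0,
                     min_interval_s=0.05, max_interval_s=1.0,
                     low_pitch_hz=440.0, high_pitch_hz=880.0):
    """Map a distance to (pause between beeps, beep pitch).

    Hypothetical mapping: all parameter values are illustrative assumptions.
    """
    # Clamp the distance into the range the mapping covers.
    d = min(max(distance_mm, 0.0), max_distance_mm)
    # 0.0 when far away, 1.0 when touching the structure.
    closeness = 1.0 - d / max_distance_mm
    # Closer means shorter pauses between beeps (linear interpolation).
    interval_s = max_interval_s - closeness * (max_interval_s - min_interval_s)
    # Closer means higher pitch; interpolate exponentially so that equal
    # changes in distance are heard as equal musical steps.
    pitch_hz = low_pitch_hz * (high_pitch_hz / low_pitch_hz) ** closeness
    return interval_s, pitch_hz


def sine_beep(pitch_hz, duration_s=0.5, sample_rate=44100):
    """Synthesize one beep as a list of samples in the range [-1, 1]."""
    n = round(duration_s * sample_rate)
    return [math.sin(2.0 * math.pi * pitch_hz * i / sample_rate)
            for i in range(n)]


if __name__ == "__main__":
    for distance in (20.0, 10.0, 5.0, 0.0):
        interval, pitch = distance_to_beep(distance)
        print(f"{distance:5.1f} mm -> beep every {interval:.2f} s at {pitch:.0f} Hz")
```

A real system would feed the tracked instrument position into such a mapping many times per second and send the samples to an audio device; choosing which sound parameters to vary, and how, is exactly the kind of design question the research described here is trying to answer.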

Although investigation into auditory display has only scratched the surface, intensified efforts are being made to help determine the clinical cases in which auditory display is most feasible, and how to best implement it. Previous attempts have lacked the interdisciplinarity between clinicians, sound designers, and medical engineering experts to make substantial progress in exploiting the advantages of auditory display in medicine. In Bremen, Germany, and Montreal, Canada, two groups are at the forefront of developing new ways of applying sound in the OR.

In Bremen, a new research group called the Creative Unit: Intra-Operative Information at the University of Bremen, together with the Fraunhofer Institute for Medical Image Computing MEVIS, has achieved a level of interdisciplinarity that has produced some promising results. Sound designers, clinicians, and visualization experts have been brought together in this unique workgroup. One study performed at the Robert Bosch Hospital in Stuttgart investigated auditory display for resection line marking in liver surgery: using a combined audiovisual display, the average time spent looking at the navigation screen was reduced from 90% to 4%, and average line-following accuracy improved from 1.4 mm to 0.6 mm (see video 1 below). In another study on needle placement, completed with the Computer Assisted Surgery Group at the Otto-von-Guericke University in Magdeburg, Germany, results were the first to show that a completely screen-free (blind) placement of a needle is possible, although task time increased due to the novelty of using auditory display. For a quick peek into some of the methods currently under investigation, see video 2.

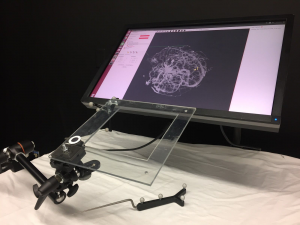

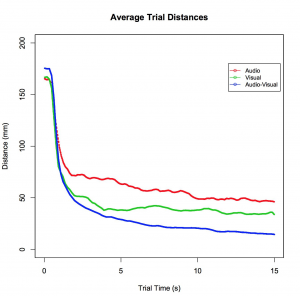

At Concordia University, the Applied Perception Lab, headed by Dr. Marta Kersten-Oertel in collaboration with Dr. Louis Collins’ NIST Lab, Montreal Neurological Institute/McGill University, is investigating the use of auditory display within neurosurgery. Their recent research has shown that using auditory display to compensate for a lack of distance information in 2D visualizations can improve the accuracy and efficiency of 3D surgical tasks, such as locating a tumor or aneurysm (see comparison of audio, visual, and combined displays in Figure 3). In addition to quantifying the performance gains associated with auditory display within image-guided neurosurgery, the team is also investigating the vast number of potential auditory signals that can be used to carry real-time information, and recently discovered participants’ proclivity for using signal-to-noise manipulations to navigate along a predetermined path. The Applied Perception Lab is currently developing a series of standardized tests for measuring the utility of a given auditory display, which incorporate objective, subjective, and physiological measurements.

Challenges / Future

As audio interfaces increase in popularity (including a new generation of devices from Apple, Amazon, Google, etc.), it is likely that the efficacy of using auditory display within a wide range of medical tasks will only become more apparent. While some might caution that hospital environments are already too noisy or acoustically complex to introduce further auditory streams, well-designed and tested display systems offer a potential solution to this sense of information overload. In particular, there is a true need to understand the interplay between audio and visual displays (and potentially other modalities such as haptic display) in order to optimize the presentation of information in a variety of contexts, including healthcare and beyond.

The two working groups in Bremen and Montreal have only begun to scratch the surface of possibilities using auditory display in the OR. Future investigations must include an emphasis on the comparison of various methods as well as studies that attempt to bring established prototypes into real clinical scenarios for evaluation. Interaction with clinicians is of utmost importance because only then can truly suitable intraoperative use cases be discovered. Evangelizing the power and accuracy of auditory displays to clinicians must not be forgotten: potential users should be aware that sound doesn’t have to be annoying or a source of frustration, but can instead be a flexible tool that offloads reliance on screens and helps complete a variety of tasks that could previously be accomplished only with visual displays.

—

David Black is a researcher in Department 1 of the University of Bremen and a member of the Medical Image Computing group and the interdepartmental Creative Unit: Intra-Operative Information. He is also a researcher at Fraunhofer MEVIS and currently a doctoral candidate at Jacobs University.

Joseph Plazak is a Senior Software Development Engineer at Avid Technology, Visiting Professor at the Schulich School of Music, McGill University and former member of the Applied Perception Lab at Concordia University.
